Good morning,

Last week’s edition of this newsletter stirred something in many of you. I got quite a few slightly worried reactions.

It was all about the trade-offs we are making in maximizing this new technology, effectively abandoning our last shreds of privacy in order to be able to play at this game. Selling our souls to a digital devil in exchange for convenience.

One of you hit me with this email:

“Dude, that is a BRUTAL email that almost makes me regret signing up for this newsletter. But also really impressive. Really well done. Great job capturing the nuance and danger of what we’re working with in a tangible (rather than futuristic dystopian potential) and immediate way. I’ll be sharing this with my friends.”

Those reactions are really fun to read. That “almost regret” is the reaction I live for: it means the drug hit the bloodstream. This reader saw the bars of the jail that is being constructed before his eyes, even if just for a flicker.

I also thought: ‘Huh? Nuance? It can get a lot worse than this, friend.’

So this week I’m going to push this reader right to the edge of the unsubscribe button.

Or in this case the “head in the sand”-button.

We need to address the dystopian potential of artificial intelligence. I think that’s an important and difficult conversation to have. (And - pinky promise - next week I’ll find something more optimistic to explore.)

Ready? Ok, let’s go.

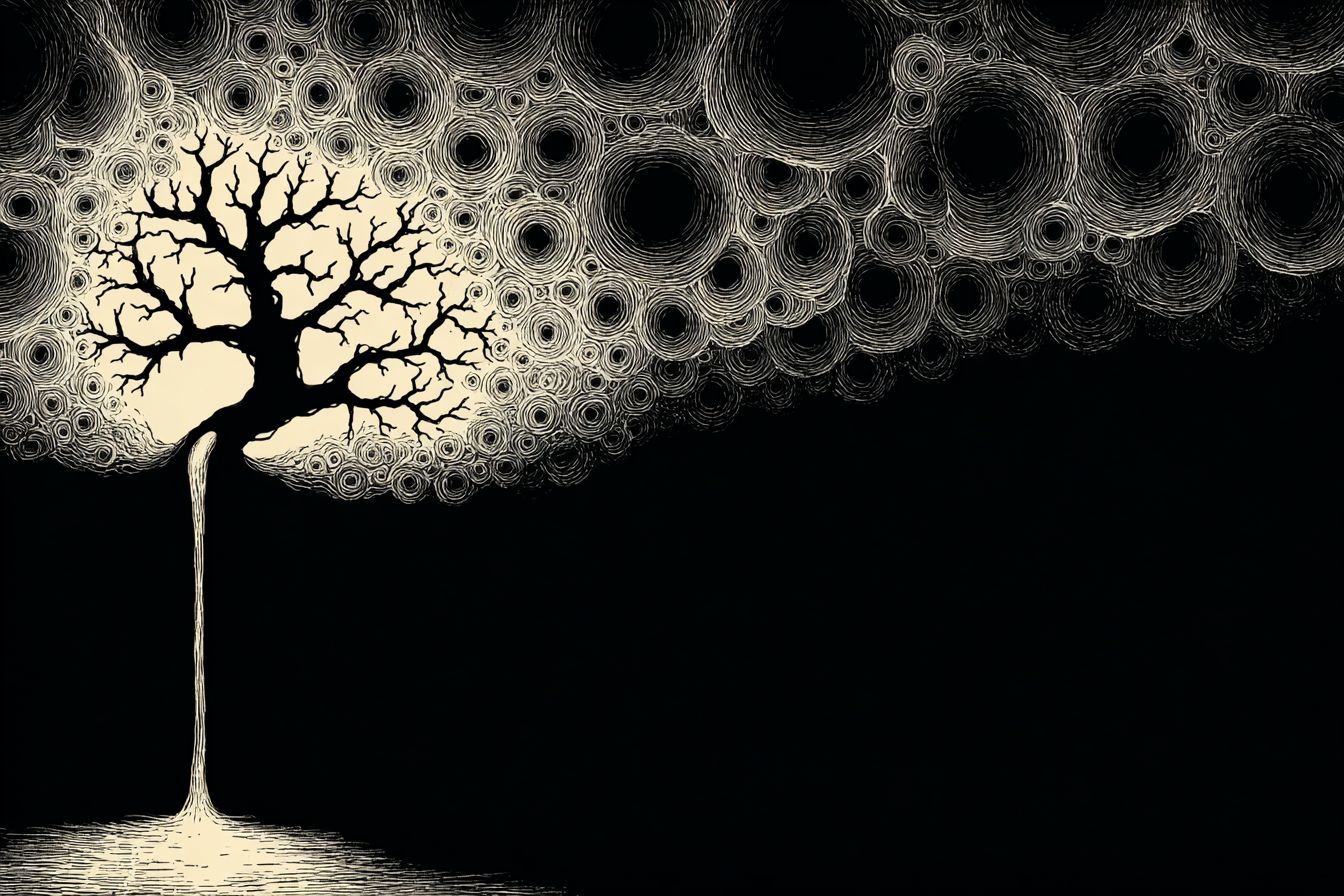

Allow me to drag Russian novelist Yevgeny Zamyatin into the room, the ghost of a man who saw us long before we saw ourselves. In the novel We, written in the early 1920s, he delivered a scathing criticism of the young Soviet state and laid out the architecture of a glass-walled society where every step was tracked, every thought monitored, every human reduced to a number in the state machine. The book was a major inspiration for “1984”. Zamyatin wasn’t just describing the Soviet regime - he was prophesying Siri, Alexa, ChatGPT and Google Maps. He knew the real cage wouldn’t need iron bars. It would be built from transparency, efficiency, and the narcotic ease of “being managed”.

The plot twist here is that we didn’t stumble into this prison; we built it ourselves. The moment the “smartphone” slid into our palms, the deal was sealed. What looked like liberation will prove to be the shiniest set of handcuffs ever designed.

Since 2007, we’ve carried our prison wardens in our pockets - feeding them every location ping, every search query, every late-night text, every half-formed desire whispered into microphones we pretended weren’t listening. We didn’t just give away privacy; we uploaded our souls in real time. Zamyatin’s glass walls are glowing touchscreens, and we tap them compulsively, like prisoners who fund and maintain their own cell. Oblivious to the outside world.

Social media proved the perfect precursor to artificial intelligence. Everything captured all at once and fed into the machine - where AI agents are now waiting to weaponize and ‘ultra-personalize’ this data.

Meanwhile, the high priests at the World Economic Forum in Davos smile as they chant their gospel: “you will own nothing and you will be happy”, their agenda pushed by a carefully selected set of politicians. You don’t own music, you rent it. Same with films, software, houses and cars. The 1% own everything - the rest are lucky to be allowed to use it.

The digital euro and the digital dollar are waiting backstage - both in the works. State-backed “stablecoins” are ready to abolish cash, the last anonymous refuge of a free human being. Cameras bloom like fungus across the cities, every angle covered, every face catalogued. And all of it justified by convenience, efficiency and above all: security.

Don’t you want to be safe?

The method is not militarized control, as in “1984” or “We”, but ease of use and entertainment. The velvet fist of control wrapped so tight around your life that you thank it for the comfort.

AI as the great catalyst for age-old political agendas. Finally, here’s the tool that has the potential to subdue us all. It’s what ‘they’ have always wanted.

And in the meantime we’re not resisting. We’re applauding. In an ironic twist, I might actually be contributing to that myself by teaching people about AI.

Every “helpful” autofill or ChatGPT answer, every eerily perfect recommendation, every moment the machine finishes our thought before we do—it’s another brick in the wall we mistake for progress.

Zamyatin was screaming at us from the 1920s: don’t build the glass house, and especially don’t let them make transparency your religion. But here we are, nodding along. Mistaking captivity for convenience.

Soon there will come a time when dissent or criticism is impossible. You dare to question us, you dare to criticize us? Let’s see how you fare without your bank account. If you’ve been paying attention to what is happening in the UK, you can see that governments are absolutely willing to do anything to keep their citizens from revolting. Tweet something incendiary and you’re off to jail. A few years ago, Canadian truckers protested against their government and their bank accounts were frozen.

This is a small taste of where we are headed.

So yes, if you thought last week was brutal, this one is even more so. It has to be.

Because the prison is slowly but steadily being built. The cameras are already blinking awake on every corner.

Look up now, before the last scrap of sky vanishes.

And remember: Zamyatin tried to warn us more than 100 years ago.

We just thought he was writing fiction.

AI News

OpenAI is launching a jobs platform to connect businesses with AI-skilled workers, along with a certification program to boost AI fluency across the U.S. The goal is to certify 10 million Americans by 2030, with programs developed in partnership with companies like Walmart and built into ChatGPT. The move puts OpenAI in direct competition with LinkedIn, expanding its role from job disruptor to workforce builder.

Chinese AI startup DeepSeek is developing a new agentic model that can perform complex tasks autonomously and learn from its actions, aiming for release by the end of 2025. The project follows a quieter period for DeepSeek, despite growing competition from aggressive Chinese labs like Tencent and Alibaba. If successful, the model could help revive momentum in a year where AI agents have struggled to meet high expectations.

Google DeepMind released EmbeddingGemma, a lightweight open-source model that runs on devices like phones and laptops to power offline search and language understanding in 100+ languages. It’s fast, uses little memory, and prioritizes privacy by keeping user data on-device. As demand grows for private, on-device AI tools, models like this could become a standard building block for future assistants and search apps.

OpenAI researchers say AI models hallucinate because training and evaluation reward confident guessing over admitting uncertainty, and they propose fixing that by penalizing confident wrong answers more heavily than expressions of uncertainty. Their study found models often confidently invent facts instead of admitting “I don’t know,” especially when asked for specific details like birthdays or dissertations. The proposed fix could lead to more reliable AI, especially in high-stakes tasks where accuracy matters more than boldness.

Anthropic has agreed to pay at least $1.5 billion to settle a lawsuit over using pirated books to train its Claude AI, setting the first major financial benchmark for copyright disputes in AI. The deal covers about 500,000 books at $3,000 each, with Anthropic also required to delete the pirated copies. While the company avoids a bigger legal battle, the settlement concerns piracy only, not the broader question of whether training on legally obtained texts counts as fair use.

OpenAI will start mass-producing its own AI chips in 2026 through a $10B partnership with Broadcom, aiming to cut costs and reduce dependence on Nvidia. The move mirrors efforts by Amazon, Google, and Meta to build custom chips amid global GPU shortages. Producing its own hardware gives OpenAI more control over scale and cost as demand for powerful models like GPT-5 continues to surge.

OpenAI is backing “Critterz,” a fully AI-assisted animated film aimed at proving that artificial intelligence can dramatically cut production time and cost in Hollywood. The film, created using GPT-5 and image models, is targeting a Cannes 2026 debut with a budget under $30 million — a fraction of the norm. While AI may help speed up animation, the real test will be whether audiences embrace a film openly built with AI tools.

A new study shows AI voice agents helped seniors monitor blood pressure more efficiently and cheaply, cutting costs by nearly 90% and earning high satisfaction scores. The agents handled thousands of calls, escalated health concerns, and successfully collected readings from patients with outdated records. The results point to a future where AI plays a larger role in elder care, especially when paired with tools that guide users visually and verbally.

AI users on X shared the prompts that transformed their workflows, highlighting creative ways to get smarter results by treating AI as a structured thinker. From building fake advisor panels to having AI write its own ideal prompts, the best submissions focused on methods that forced the model to plan deeply. The takeaway: better prompts unlock better thinking — and AI can help you write them.

MIT spinoff AlterEgo has unveiled a wearable device that interprets silent speech using AI, enabling users to communicate and control devices by silently intending to speak. The headset detects tiny facial muscle movements and converts them into commands, allowing for tasks like texting, searching, or talking with other wearers, all without making a sound. It’s an early look at how human-AI interaction could evolve beyond voice or typing, without the need for invasive implants.

Microsoft is reportedly set to bring Anthropic’s Claude models into Office 365, signaling a major expansion beyond its exclusive OpenAI partnership. Sources say Claude 4 outperformed GPT-5 in office-related tasks like spreadsheet and slide creation, and Microsoft may access the models through AWS despite cloud rivalry. The move highlights Microsoft’s shift toward a multi-model AI strategy, focused on performance over partnership loyalty.

Anthropic’s Claude can now create and edit files like Excel sheets, Word docs, PowerPoints, and PDFs directly inside chat, expanding its role in everyday workplace tasks. The AI can generate reports, convert formats, and build slide decks or spreadsheets from raw input, available now to enterprise and team users. It marks a clear push to challenge OpenAI and Microsoft by turning Claude into a powerful office productivity assistant.

Quickfire News

Atlassian agreed to acquire The Browser Company for $610 million, aiming to enhance the Dia AI browser with enterprise-grade integrations and security features.

Warner Bros. filed a copyright lawsuit against Midjourney, accusing the company of using characters like Superman and Batman in AI-generated content without permission.

Microsoft announced new AI education initiatives at a White House task force meeting, offering free access to Copilot, educator grants, and AI courses on LinkedIn.

AI search company Exa raised $85 million in Series B funding, bringing its valuation to $700 million.

Lovable launched Voice Mode, a new feature powered by ElevenLabs that lets users code and build apps through voice commands.

xAI CFO Mike Liberatore departed the company, following recent exits by co-founder Igor Babuschkin and general counsel Robert Keele.

Alibaba launched Qwen3-Max, a 1 trillion+ parameter model that outperforms other Qwen3 variants, Kimi K2, DeepSeek V3.1, and Claude Opus 4 (non-reasoning) on multiple benchmarks.

OpenAI disclosed it expects to spend $115 billion over the next four years on compute, data centers, and talent—an $80 billion increase from earlier estimates.

French AI company Mistral is reportedly raising $1.7 billion in a Series C round, which would bring its valuation to $11.7 billion, making it Europe’s most valuable startup.

Joanne Jang, head of model behavior at OpenAI, announced the formation of OAI Labs, a team focused on creating and testing new ways for humans to collaborate with AI.

Apple is facing a class action lawsuit from authors who claim the company trained its OpenELM language models on a pirated dataset of copyrighted books.

Anthropic publicly endorsed California’s SB 53 bill, which would require AI companies to publish safety frameworks and transparency reports.

Cognition raised $400 million in new funding, bringing its valuation to $10.2 billion following its recent acquisition of Windsurf.

Google introduced new educational tools in NotebookLM, including customizable flashcards, interactive quizzes, and redesigned reports for academic use.

Alibaba launched Qwen3-ASR, a multilingual speech recognition model that can process accents, singing, and interpret text prompts with high accuracy.

Google expanded AI Mode to support additional languages—Hindi, Indonesian, Japanese, Korean, and Brazilian Portuguese—for more localized search experiences.

Apple offered minimal AI updates at its hardware event, with Apple Intelligence features limited to live translation and new health monitoring tools.

ByteDance Seed launched Seedream 4.0, a 4K-capable image generation and editing model with multimodal features, competing with models like Nano Banana.

Baidu introduced ERNIE X1.1, a reasoning model approaching GPT-5 and Gemini 2.5 Pro in benchmarks, while significantly reducing hallucinations.

Eli Lilly released TuneLab, an AI drug discovery platform built on over $1 billion worth of the company’s proprietary research data.

Anthropic confirmed in a report that Claude had experienced response quality issues in recent weeks but stated it did not intentionally degrade performance.

Google upgraded its Veo 3 and Veo 3 Fast models with support for vertical video, 1080p HD, and a 50% price reduction.

Tencent open-sourced HunyuanImage 2.1, a high-quality image generation model with strong realism, prompt alignment, and in-image text rendering.

Meta signed a $140 million multi-year deal with Black Forest Labs to use its AI image technology, following its recent partnership with Midjourney.

Closing Thoughts

That’s it for us this week.